Lessons from The Mozart Effect

Can listening to Mozart make your kid more intelligent?

The Mozart Effect wasn't the original quirky psychology finding. But it had a strange, long-lasting power during the 90s. And, for those who were paying attention, it should have been an early warning for the replication crisis in psychology 15 years later.

Here is one version of the story:

- In 1993, researchers find that having college students listen to 10 minutes of a Mozart sonata improves their IQ. There were 36 participants. The IQ outcome measures were three tasks from a section of a standard IQ test.

- The media pounces on the idea that classical music can improve intelligence, and dubs it "The Mozart Effect," despite cautions by the researchers against "distorting the... modest findings."

- Preying on the anxieties of parents and leveraging the imprimatur of scientific research, enterprising business people get rich selling classical music and related "high-intelligence" programs for infants, which ostensibly enhance children's intelligence. A Georgia governor even seeks funding to buy these programs for all children in the state.

- Later experiments, however, fail to replicate the effect. Within journal articles, there is the usual wrangling between the original researchers and replicators about the exact conditions necessary to replicate the effect and what the original researchers did or did not do. But, by the early 2000s, for many psychologists, the Mozart Effect has become just another pop-psychology myth to do battle with.

Clearly, this is not how science – and the public understanding of science – should work. With this version of the story, there's an easy villain: the media. Researchers were trying to do interesting, speculative work, but the media blew it all out of proportion. And I think that's partly true.

But the popular idea that listening to Mozart could improve intelligence wasn't just a case of "transforming exploratory research into confirmatory findings". Rather, it was a conglomerate of distortions – the same kinds of distortions of scientific evidence that go on today. Consider all of the leaps of logic that it takes to go from the original research to the "Baby Mozart" business idea:

Population differences: the study had nothing to do with child development and the "theory" underlying the research had nothing to do with child development (more on this theory in a moment). But the most profitable application of the research – if you could call it that – was about improving children's intelligence. Presuming that findings from one population extend to another one isn't always a bad idea, but the leap from short-term adult change to long-term child development is a big one.

Exposure, time frame, and the additive assumption: It was ten minutes of listening to a Mozart sonata. And the boost in spatial reasoning occurred immediately afterward (the original researchers even suggested that this would not be a long-term effect). But months and years of exposure – in some cases, even in the womb – were supposed to impact intelligence far into the future.

The extension of effects: the outcome measure in the original study was about spatial-temporal reasoning – really just a small part of one section of a Stanford-Binet intelligence test. But companies claimed their products would improve general intelligence – something that was never tested for originally and never established in any of the follow-up research.

But was it just a case of "there goes the media again"? It's an appealing argument because I can almost hear a cheery 90s news anchor say, "Can listening to Mozart make you smarter? We'll find out after the break..." But there was also some truly sloppy science going on.

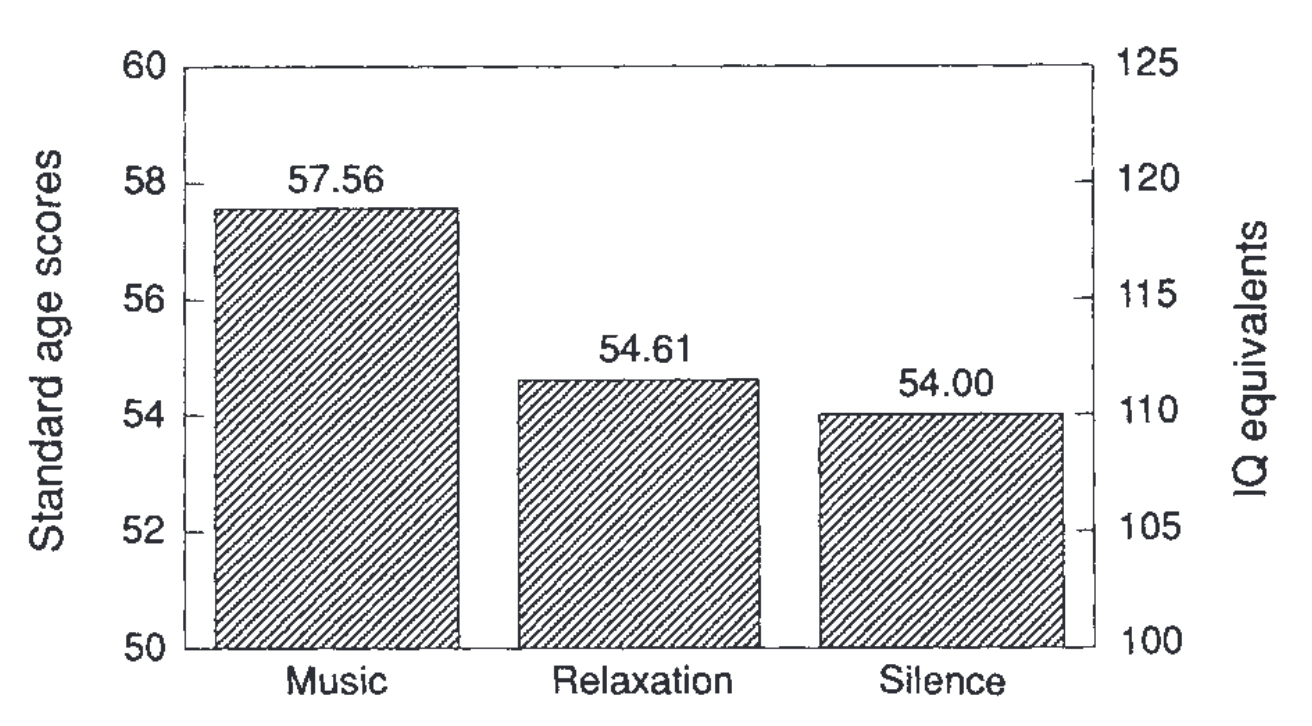

Take a look at this graph from the original paper:

For those of you who read How to Lie with Statistics, this is a standard "USA Today"-level tactic: start the y-axis on a bar graph from some higher-than-zero point so you can emphasize the difference between the groups. Yes, they may have gotten a significant effect – and sometimes small differences are quite important – but I think the appropriate response here is a resounding shoulder shrug – or, at most, a slightly raised eyebrow.
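To put a rough number on the trick, here is a minimal Python sketch. The two group scores are made-up values chosen purely for illustration, not numbers from the paper, and `bar_height_ratio` is a hypothetical helper. It compares how much taller one bar is drawn than the other when the axis starts at zero versus at some higher point.

```python
def bar_height_ratio(a, b, baseline=0.0):
    """Ratio of the drawn heights of two bars when the y-axis starts at `baseline`."""
    return (a - baseline) / (b - baseline)

# Hypothetical group means, for illustration only (NOT taken from the paper).
group_a, group_b = 58.0, 55.0

# Axis starting at zero: the bars look nearly the same height.
honest = bar_height_ratio(group_a, group_b, baseline=0.0)

# Axis starting at 50: the same data now shows one bar towering over the other.
truncated = bar_height_ratio(group_a, group_b, baseline=50.0)

print(f"axis from 0:  taller bar is {honest:.2f}x the shorter one")
print(f"axis from 50: taller bar is {truncated:.2f}x the shorter one")
```

With these invented numbers, a difference of about 5% in the data is drawn as a 60% difference in bar height. The data didn't change; only the starting point of the axis did.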

There are times when dual y-axes (one measure on one side and another on the other) make sense, though as a general rule I think researchers should avoid the practice. It doesn't make sense here because the researchers do something squirrelly by mathemagically determining what the equivalent increase in IQ would be if the participants had taken a full IQ test. It's kind of like saying, "I gave two students ten questions from a recent SAT; one student answered 8 questions correctly and another answered 7. This would be equivalent to an increase of 40 points on the full SAT." You haven't given them a full test, so it makes little sense to talk as if you had.
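A back-of-the-envelope check shows how little ten questions can tell you. The sketch below treats each question as an independent trial with the same chance of a correct answer. That simple binomial model is an assumption made here for illustration, not anything from the paper, and `proportion_se` is a hypothetical helper.

```python
import math

def proportion_se(correct, total):
    """Standard error of a proportion under a simple binomial model."""
    p = correct / total
    return math.sqrt(p * (1 - p) / total)

# The two students from the SAT analogy: 8/10 and 7/10 correct.
se_a = proportion_se(8, 10)
se_b = proportion_se(7, 10)

# Standard error of the difference between two independent proportions.
se_diff = math.sqrt(se_a**2 + se_b**2)
observed_diff = 8 / 10 - 7 / 10

print(f"observed difference: {observed_diff:.2f}")
print(f"standard error of the difference: {se_diff:.2f}")
```

Under those assumptions, the one-question gap is about half the size of its own standard error, so it is hard to tell apart from noise, let alone scale up to a score on the full test.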

Clearly it wasn't enough for the researchers to just find a difference on these spatial-temporal tasks – they wanted to relate it to overall IQ. Why? The only thing I can think of is that IQ sounds better. What was a small difference in spatial-temporal reasoning becomes a "9-10 point" difference in IQ (keeping in mind that IQ scores are standardized to have a mean of 100 and a standard deviation of 15, so this is roughly two-thirds of a standard deviation – a massive gain).
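The arithmetic behind that last claim is simple enough to sketch. The only inputs are the standard deviation of 15 and the "9-10 point" figure quoted above; `iq_points_to_sd` is a hypothetical helper name.

```python
IQ_SD = 15  # standard deviation of standardized IQ scores

def iq_points_to_sd(points, sd=IQ_SD):
    """Express a difference in IQ points in standard-deviation units."""
    return points / sd

# The "9-10 point" difference reported for the Mozart condition.
for points in (9, 10):
    print(f"{points} IQ points = {iq_points_to_sd(points):.2f} SD")
```

That works out to between 0.60 and 0.67 standard deviations, from ten minutes of listening.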

Of course, as replications testing variations on the tasks failed, the original researchers insisted that their finding had nothing to do with general IQ in the first place and was specifically about spatial-temporal reasoning.

All of this was accompanied by methodological sloppiness. The report on the experiment can barely be called a paper. It's a handful of paragraphs. Later researchers who tried to reconstruct what happened had to rely on every scrap of evidence from the original researchers – formal or informal – and even then the reconstructed experimental protocol doesn't quite make sense.

But the more fundamental question is: why were they doing this research? The original researchers were apparently interested in the idea because spatial-temporal reasoning tasks and music activated the same areas of the brain. So if you primed these neurons (aligning them in some way) with one activity, you might get better performance in the other activity. This idea was called "the trion model". But, as far as I can tell, it wasn't much of a model.

In the beginning, it was the trion model that supported the empirical tests. Later researchers, however, wrote as if the empirical findings (i.e., the findings in support of a Mozart Effect) supported the trion model. At best, the trion model is a basic prediction ("if you do X, then Y will happen"), and the Mozart Effect finding would be some evidence about the validity of that prediction. But predictions are not hypotheses and they are certainly not models.

After years of extensions, failed replications, and general exploration about the nature of the original findings, no one had any idea what the mechanism causing the effect might be. Was it a mood thing – did the Mozart sonata just make people feel a little happier, which improved their performance? Possible. Was it a preference thing – maybe people just preferred the sonata over the alternatives? There's at least some support for this idea – one research group got the same effect from people who preferred listening to narration. Would any other music cause the same effect? Researchers reused the same ten minutes of a Mozart sonata in nearly every experiment. Some research groups found a similar effect with Yanni and Schubert pieces; others couldn't even replicate the Mozart finding.

In the absence of any good explanations about what was going on, the experimental search space was very broad. Even if the original research finding had been supported through replications, it's a dangling thread. No one can say what it means about the brain or about intelligence or about musical skills or what the next experiment should be; the most you might say is that there is some vague connection between spatial transformations across time and music.

Is there an overall lesson here? Let me go with precision. Sloppy joes are for the dinner table – not for science. There was sloppy theorizing, sloppy methodology, and sloppy reporting on the science side; wild extensions of the experimental findings in media and business; and, as ever, an appetite for easy, get-intelligent-quick schemes from consumers.

The good news is that, after reading these journal articles and the media reports on the Mozart Effect from the 90s... I think we've gotten better? All of the things I mentioned above still happen, of course, but opposing, critical views push back more successfully on findings like these.

REFERENCES

The latest review of the literature on the Mozart Effect that I read is here, although I've noticed some researchers continue to cite the effect as "real" into the 2000s and 2010s:

Pietschnig, J., Voracek, M., & Formann, A. K. (2010). Mozart effect–Shmozart effect: A meta-analysis. Intelligence, 38(3), 314-323.

The main finding is that there seems to be some small effect that music has (compared to no-music, passive control conditions), but little evidence that the specific Mozart sonatas matter. They favor an arousal explanation (more arousing music leads to better performance on the tests, but the effect is rather small). They also found evidence of publication bias in favor of experiments that compared the sonata to no-music control conditions.

The original 1993 paper is:

Rauscher, F. H., Shaw, G. L., & Ky, C. N. (1993). Music and spatial task performance. Nature, 365(6447), 611.

A follow-up replication/extension from the original team is here:

Rauscher, F. H. (1994). Music and spatial task performance: A causal relationship.

Below is a readable criticism from the time making some of the points I made above:

Steele, K. M., Bass, K. E., & Crook, M. D. (1999). The mystery of the Mozart effect: Failure to replicate. Psychological Science, 10(4), 366-369.

On the Mozart Effect being really about students' preferences:

Nantais, K. M., & Schellenberg, E. G. (1999). The Mozart effect: An artifact of preference. Psychological Science, 10(4), 370-373.

The paper below is one of the more extensive analyses of the original studies. The authors painstakingly reconstruct what the original study protocol might have been.

Fudin, R., & Lembessis, E. (2004). The Mozart effect: Questions about the seminal findings of Rauscher, Shaw, and colleagues. Perceptual and Motor Skills, 98(2), 389-405.

This book, which I think is otherwise quite good, paints the Mozart Effect as essentially a media (and not a science) problem. That's where the "distorting the modest findings" quote comes from.

Hirsh-Pasek, K., Golinkoff, R. M., & Eyer, D. (2004). Einstein never used flash cards: How our children really learn--and why they need to play more and memorize less. Rodale Books.

How to Lie with Statistics by Darrell Huff and Irving Geis is a classic book on basic tactics that people use to distort data. Great for high schoolers.

Just FYI, links to bookshop are affiliate links, so I get a kickback if you buy from them.